In the mid-1960s, Joseph Weizenbaum of the MIT Artificial Intelligence Laboratory created ELIZA, an early natural language processing computer program and the first chatbot therapist. While ELIZA did not change therapy forever, it was a major step forward and one of the first programs capable of attempting the Turing Test. Researchers were surprised by the number of people who attributed human-like feelings to the computer’s responses.

Fast-forward 50 years, and advancements in artificial intelligence and natural language processing have made chatbots useful in a number of scenarios. Interest in chatbots has increased by 500% in the past 10 years, and the market size is expected to reach $1.3 billion by 2025.

Chatbots are becoming commonplace in marketing, customer service, real estate, finance, and more. Healthcare is one of the top 5 industries where chatbots are expected to make an impact. This week, we explore why chatbots appeal to healthcare providers looking to run a more efficient operation.

SCALABILITY

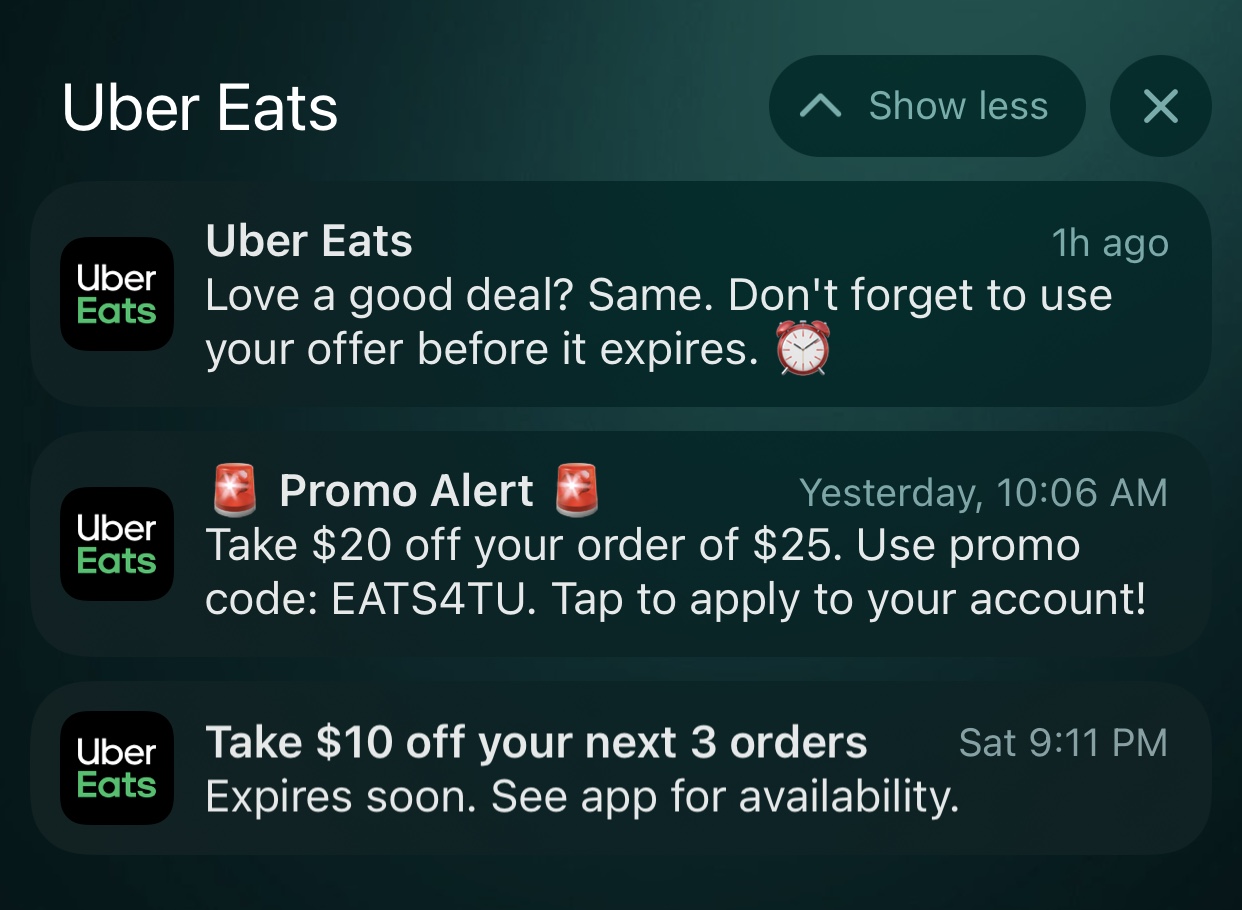

Chatbots can interact with a large number of users instantly. Their scalability equips them to handle logistical problems with ease. For example, chatbots can make mundane tasks such as scheduling easier by asking basic questions to understand a user’s health issues, matching them with doctors based on available time slots, and integrating with both doctor and patient calendars to create an appointment.
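The matching step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not how any particular product works: the `Doctor` class, the `find_appointment` function, and the idea of representing availability as exact start times are all hypothetical, and a real system would read and write slots through a calendar integration.

```python
from dataclasses import dataclass, field
from datetime import datetime


@dataclass
class Doctor:
    # Hypothetical record; real availability would come from a calendar system.
    name: str
    specialty: str
    open_slots: list[datetime] = field(default_factory=list)


def find_appointment(doctors, specialty, patient_free):
    """Return (doctor, slot) for the earliest slot that suits both sides, or None."""
    candidates = [
        (slot, doctor)
        for doctor in doctors
        if doctor.specialty == specialty
        for slot in doctor.open_slots
        if slot in patient_free
    ]
    if not candidates:
        return None
    # Compare on the slot only, so ties never try to compare Doctor objects.
    slot, doctor = min(candidates, key=lambda pair: pair[0])
    doctor.open_slots.remove(slot)  # book it so the slot is not offered twice
    return doctor, slot
```

In this sketch, the chatbot's opening questions would supply `specialty` and the patient's calendar would supply `patient_free`. Calling the function again after a booking offers the next earliest shared slot, and it returns `None` once nothing overlaps.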

At the onset of the pandemic, Intermountain Healthcare was receiving an overload of inquiries from people who were afraid they might have contracted Covid-19. To handle the inquiries, Intermountain added extra staff and a dedicated line to its call center, but it wasn’t enough. Eventually, it turned to artificial intelligence in the form of Scout, a conversational chatbot made by Gyant, to run a basic coronavirus screening that determined whether patients were eligible to get tested at a time when the number of tests was limited.

Scout only had to ask very basic questions, but it handled the flood of inquiries with ease. Chatbots have proved particularly useful for understaffed healthcare providers. As they employ AI to learn from previous interactions, they will become more sophisticated, which will enable them to take on more complex tasks.

ACCESS

Visiting a doctor can be challenging due to the considerable amount of time it takes to commute. Work schedules and a lack of reliable transport may prevent people from making the trip. Chatbots, and telehealth in general, provide a straightforward solution to these issues, enabling patients to find out whether an in-person consultation will be necessary.

While chatbots cannot provide medical insight or prognoses, they are effective at collecting basic data, such as anxiety and weight changes, and encouraging patients to stay aware of it. They can help triage patients through preliminary stages using automated queries and store information that doctors can later reference with ease. Their ability to disseminate information and handle questions will only increase as natural language processing improves.
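As a rough illustration of automated queries that store answers for a doctor to review later, here is a minimal Python sketch. Everything in it is an assumption made for the example: the question wording, the `IntakeRecord` and `run_intake` names, and especially the cutoff values, which are placeholders and not medical guidance.

```python
from dataclasses import dataclass, field

# Hypothetical intake questions; a real deployment would use clinically reviewed wording.
QUESTIONS = {
    "anxiety_level": "On a scale of 0 to 10, how anxious have you felt this week?",
    "weight_change_kg": "How much has your weight changed this month, in kg?",
}


@dataclass
class IntakeRecord:
    patient_id: str
    answers: dict[str, float] = field(default_factory=dict)

    def needs_follow_up(self, anxiety_cutoff=7, weight_cutoff_kg=3.0):
        # Placeholder cutoffs for illustration only, not medical guidance.
        return (
            self.answers.get("anxiety_level", 0) >= anxiety_cutoff
            or abs(self.answers.get("weight_change_kg", 0)) >= weight_cutoff_kg
        )


def run_intake(patient_id, ask):
    """Ask each question via `ask` (a callable returning the reply text) and store the answers."""
    record = IntakeRecord(patient_id)
    for key, prompt in QUESTIONS.items():
        record.answers[key] = float(ask(prompt))
    return record
```

Here `ask` stands in for whatever channel delivers the question and returns the patient's reply. The stored record only flags a patient for human follow-up; any medical judgment stays with the doctor.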

A PERSONALIZED APPROACH — TO AN EXTENT

Chatbot therapists have come a long way since ELIZA. Developments in NLP, machine learning, and more enable chatbots to deliver helpful, personalized responses to user messages. Chatbots like Woebot are trained to employ cognitive-behavioral therapy (CBT) to aid patients suffering from emotional distress by offering prompts and exercises for reflection. The anonymity of chatbots can also encourage patients to answer more candidly, without fear of human judgment.

However, chatbots have yet to achieve one of the most important qualities a medical provider should have: empathy. Each individual is different. Some may be scared away by formal talk and prefer casual conversation, while for others formality may be of the utmost importance. Given the delicacy of health matters, a lack of human sensitivity is a major flaw.

While chatbots can help manage a number of logistical tasks to make life easier for patients and providers, their application will be limited until they can gauge people’s tone and understand context. If recent advances in NLP and AI are any indication, that time may come soon.