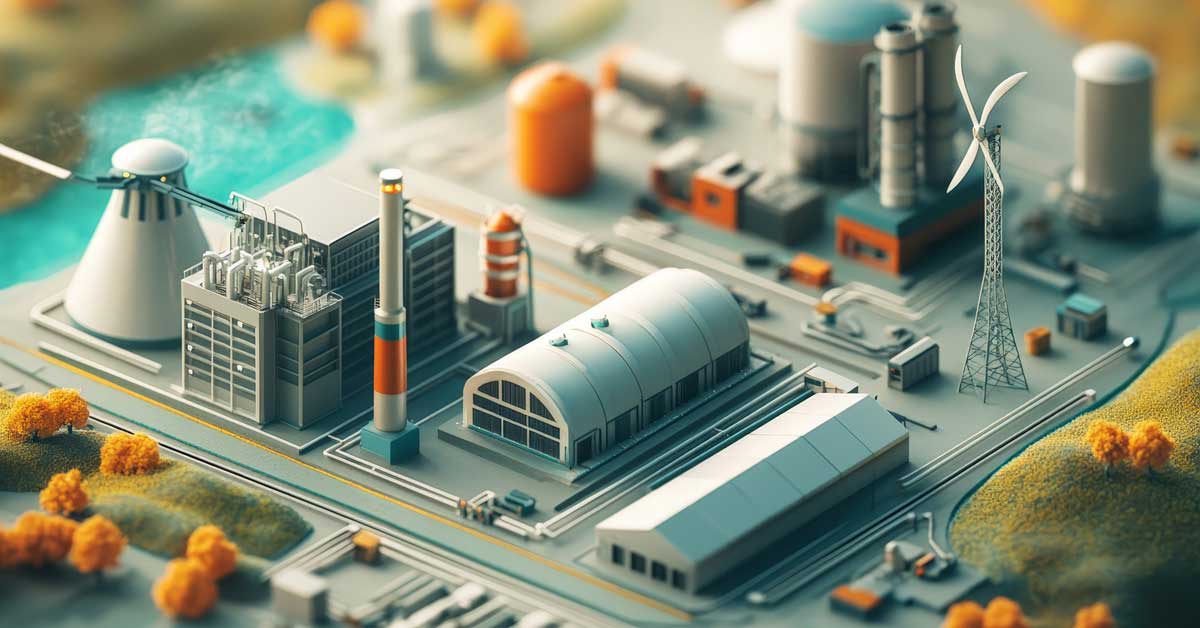

In a world increasingly conscious of its ecological footprint, the drive towards sustainable energy solutions is more critical than ever. Understanding the intersection of sustainable technology and renewable energy is key to pioneering a greener future. This blog explores how sustainable tech solutions are transforming the landscape of renewable energy, providing businesses with innovative ways to reduce their carbon footprint and enhance efficiency. By embracing these advancements, organizations can make meaningful strides toward long-term sustainability and resilience.

Powering the Future

The basics of renewable energy sources such as solar, wind, and hydro set the stage for why sustainable energy is essential in our shift away from fossil fuels. With global demand for energy growing, shifting to renewable sources is not just a choice; it’s a necessity. In this post, you’ll discover the impact of sustainable tech solutions on renewable energy, a balanced look at their pros and cons, and what the future holds for these technologies. Let’s dig into this vital topic and see how we can leverage these innovations for a sustainable tomorrow.

The Impact of Sustainable Tech on Renewable Energy

Innovative tech solutions are transforming the renewable energy sector, enhancing efficiency, affordability, and accessibility. These advancements enable us to better harness natural resources like wind, solar, and hydropower, significantly lowering environmental impact while maximizing energy production. They play a pivotal role in reducing dependence on fossil fuels and supporting a sustainable response to global energy demands.

One of the most significant effects is the reduction in greenhouse gas emissions. By integrating sustainable tech solutions, businesses can lower their carbon footprint, contributing to cleaner air and a healthier planet. For technology executives, this means not just complying with environmental regulations but leading the way in corporate social responsibility.

Beyond environmental benefits, these solutions offer economic advantages. Advancements in technology have led to a decrease in the costs associated with renewable energy production, making it more competitive with traditional energy sources. This economic shift makes sustainable tech an attractive investment for businesses looking to align their operations with environmentally friendly practices.

Breaking Down the Benefits

Adopting sustainable tech solutions in renewable energy presents numerous benefits, from environmental impacts to economic savings. For businesses, these solutions can lead to substantial cost reductions over time, as renewable energy typically results in lower operational expenses compared to traditional energy sources.

The environmental benefits are significant. Sustainable tech solutions help mitigate climate change by reducing carbon emissions, conserving water resources, and decreasing reliance on finite resources. This aligns with global sustainability goals and reinforces a brand’s reputation as environmentally conscious.

In addition, these technologies often come with government incentives and tax breaks aimed at promoting green energy adoption. This financial boost can be a compelling reason for CTOs and CFOs to consider integrating them into their existing operations. By doing so, companies not only improve their bottom line but also contribute positively to the community and environment at large.

Expanding the Benefits of Sustainable Tech in Renewable Energy

The advantages of adopting sustainable tech solutions extend far beyond cost savings and environmental impact. They amount to a strategic transformation for businesses positioning themselves for future growth. By shifting towards renewable energy, companies can diversify their energy portfolios and reduce reliance on finite, fossil-based fuels. As the supply of traditional fuel sources diminishes and extraction costs rise, businesses that have adopted renewable energy can avoid these escalating expenses. Because renewables carry no fuel cost, their pricing tends to be more stable, giving a less volatile energy cost structure and allowing for more accurate and reliable financial forecasting. This financial predictability helps firms allocate resources more effectively towards innovation and expansion, ultimately fostering long-term economic growth.

Furthermore, investing in sustainable technologies positions businesses as leaders in innovation and sustainability, reinforcing their brand reputation and competitive edge. As awareness grows about the detrimental impacts of climate change, consumers and stakeholders are increasingly turning their support towards companies that demonstrate a steadfast commitment to environmental stewardship. By showcasing a proactive approach to sustainability, businesses not only enhance their public image but also attract environmentally conscious customers and investors. Additionally, these efforts can facilitate strategic partnerships with other like-minded organizations, leading to collaborations that drive further advancements in sustainable practices. Embracing this forward-thinking approach solidifies a company’s role as a pioneer in the evolving landscape of global energy solutions.

Challenges and Considerations

While the path to adopting sustainable technology in renewable energy is paved with numerous benefits, it comes with its own set of formidable challenges. Key among these is the considerable initial investment required. Though the promise of long-term savings is a compelling incentive, the hefty upfront costs can present a major financial hurdle, particularly for small and mid-sized enterprises. Businesses must carefully weigh the initial expenditure against the potential for future returns, a task that requires not only financial foresight but also strategic planning.
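Weighing upfront expenditure against future returns can be made concrete with two common measures: the simple payback period and net present value (NPV). The sketch below is a minimal illustration, not a financial model. The function names and all of the figures are hypothetical, and it assumes a constant annual saving and a constant discount rate.

```python
def simple_payback_years(upfront_cost, annual_savings):
    """Years until cumulative savings cover the upfront cost (no discounting)."""
    if annual_savings <= 0:
        raise ValueError("annual_savings must be positive")
    return upfront_cost / annual_savings


def net_present_value(upfront_cost, annual_savings, discount_rate, years):
    """Discounted value of the yearly savings minus the upfront cost."""
    discounted_savings = sum(
        annual_savings / (1 + discount_rate) ** year
        for year in range(1, years + 1)
    )
    return discounted_savings - upfront_cost


# Hypothetical figures, chosen only for illustration.
upfront = 100_000.0
savings = 20_000.0

print(simple_payback_years(upfront, savings))          # 5.0
print(net_present_value(upfront, savings, 0.0, 10))    # 100000.0 (no discounting)
print(net_present_value(upfront, savings, 0.08, 10))   # lower once savings are discounted
```

A positive NPV suggests the savings outweigh the initial outlay over the chosen horizon at the chosen discount rate. Incentives, maintenance costs, and changing energy prices are left out here and would need to be added for a real evaluation.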

Infrastructure modification presents another significant challenge. Transitioning to renewable energy solutions often demands substantial overhauls of existing energy systems. This can be a daunting undertaking, necessitating both time and financial resources to reconfigure or upgrade infrastructure to accommodate new technologies. These projects are not only capital-intensive but can also disrupt daily operations, thereby requiring careful planning and execution.

Moreover, the inherent intermittency of renewable energy sources like wind and solar power demands sophisticated energy storage solutions to maintain a consistent energy supply. This challenge calls for the development and integration of advanced storage technologies that can reliably store and distribute power even when the wind drops or the sun is not shining. The refinement of these storage solutions is critical for ensuring energy stability and is an area where continual innovation is needed.
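To see why storage matters for intermittent sources, consider a toy simulation in which a battery absorbs surplus generation and releases it when generation falls short of demand. This is a simplified sketch under stated assumptions: the function name and the numbers are hypothetical, the battery has no charging losses or power limits, and dispatch is a plain greedy rule rather than an optimized strategy.

```python
def dispatch_battery(generation, demand, capacity, initial_charge=0.0):
    """Greedy dispatch: store surplus, discharge on deficit.

    Returns (unmet demand per time step, final battery charge).
    Assumes a lossless battery with no charge/discharge rate limit.
    """
    charge = initial_charge
    unmet = []
    for gen, dem in zip(generation, demand):
        surplus = gen - dem
        if surplus >= 0:
            # Store what fits; anything above capacity is curtailed.
            charge = min(capacity, charge + surplus)
            unmet.append(0.0)
        else:
            deficit = -surplus
            discharged = min(charge, deficit)
            charge -= discharged
            unmet.append(deficit - discharged)
    return unmet, charge


# Hypothetical energy per time step (same units for all values).
generation = [5.0, 8.0, 2.0, 0.0]
demand = [4.0, 4.0, 4.0, 4.0]

print(dispatch_battery(generation, demand, capacity=0.0)[0])  # [0.0, 0.0, 2.0, 4.0]
print(dispatch_battery(generation, demand, capacity=4.0)[0])  # [0.0, 0.0, 0.0, 2.0]
```

In this example, storage covers the first shortfall entirely and halves the second. Real systems also have to account for round-trip efficiency, degradation, and limits on how fast the battery can charge or discharge.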

Furthermore, the integration of sustainable tech solutions introduces a level of technological complexity that can be daunting for many organizations. This complexity necessitates a well-trained workforce, equipped with the knowledge and skills to manage and operate these advanced systems. Small businesses, in particular, may find it challenging to recruit, train, and retain the necessary personnel, adding another layer of difficulty to the adoption process. The need for specialized knowledge and skills underscores the importance of investing in workforce training and development as part of the transition strategy. Deploying effective educational programs can empower teams to manage these technologies efficiently, unlocking their full potential and ensuring long-term success in a rapidly evolving landscape.

Pros of Sustainable Tech Solutions

Environmental Impact: Sustainable tech solutions offer a significant reduction in carbon emissions and pollution. By utilizing renewable energy sources such as solar, wind, and hydropower, businesses can contribute to a cleaner and healthier planet. This shift not only helps mitigate the adverse effects of climate change but also supports global efforts to preserve natural ecosystems.

Long-term Cost Savings: Although the initial investment may be substantial, sustainable tech solutions promise considerable savings in the long run. Lower operational costs, coupled with potential government incentives and tax breaks, can lead to financial benefits that outpace those offered by traditional energy sources. Businesses can turn these savings into reinvestments for further sustainability initiatives or operational improvements.

Brand Reputation: Embracing sustainable tech solutions enhances corporate social responsibility and improves public image. Businesses that prioritize environmental stewardship are often viewed more favorably by consumers, stakeholders, and investors. This improved reputation can lead to increased customer loyalty, better brand recognition, and a competitive edge in an increasingly eco-conscious market.

Cons of Sustainable Tech Solutions

Initial Costs: One of the primary drawbacks is the high upfront capital investment required to implement sustainable technologies. The financial outlay can be a significant barrier for businesses, particularly for those with limited budgets. It’s crucial for companies to explore financing options or partnerships that can help alleviate initial financial burdens.

Infrastructure Needs: Integrating sustainable technologies often requires significant modifications to existing systems and infrastructure. This transition can be both time-consuming and expensive, potentially disrupting current operations. Businesses must carefully plan and strategize these changes, ensuring they have the necessary resources and support to manage the transition effectively.

Complexity and Maintenance: Deploying sustainable tech solutions involves technological complexity that requires specialized knowledge and ongoing maintenance. This can present challenges, especially for smaller businesses lacking the resources to hire skilled personnel or provide continuous training. Ensuring the efficient operation of these technologies demands a commitment to maintaining expertise and updating systems as needed.

Balancing these pros and cons is essential for businesses considering the shift to sustainable tech solutions. The decision should be based on a thorough analysis of current operations, budget constraints, and long-term sustainability goals. By weighing the potential benefits against the challenges, organizations can make informed choices that align with their strategic vision for the future.

Future Directions in Sustainable Technology

As we look ahead, the future of sustainable tech solutions in renewable energy appears promising. Technological advances are expected to continue driving down costs and improving efficiency, making renewable energy even more accessible. Innovations in energy storage, such as advanced battery technologies, will play a crucial role in overcoming the challenges of intermittency in renewable sources.

Furthermore, the integration of artificial intelligence and machine learning can optimize energy consumption, ensuring maximum efficiency and minimal waste. These technologies can predict energy demand patterns and adjust supply accordingly, reducing energy loss and enhancing system reliability.
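As a rough idea of what predicting demand patterns involves, the sketch below forecasts each future time step as the average of past readings at the same point in a repeating cycle (for example, the same hour on previous days). It is a simple statistical baseline standing in for a machine learning model, not a production technique. The function name and the data are hypothetical, and it assumes the history starts at the beginning of a cycle.

```python
def seasonal_average_forecast(history, period, horizon):
    """Forecast each future step as the mean of past values at the same phase.

    history: past demand readings, oldest first, starting at phase 0
    period:  number of steps in one cycle (e.g. 24 for hourly data)
    horizon: number of future steps to forecast
    """
    if len(history) < period:
        raise ValueError("need at least one full cycle of history")
    forecast = []
    for step in range(horizon):
        phase = (len(history) + step) % period
        same_phase = history[phase::period]  # every past value at this phase
        forecast.append(sum(same_phase) / len(same_phase))
    return forecast


# Two hypothetical cycles of three readings each.
history = [10.0, 20.0, 30.0, 12.0, 22.0, 32.0]
print(seasonal_average_forecast(history, period=3, horizon=3))  # [11.0, 21.0, 31.0]
```

A forecast like this could feed a supply plan, for instance deciding when to charge storage ahead of an expected peak. Practical systems usually add weather, calendar, and pricing signals to improve accuracy.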

Collaboration will be key in this evolving landscape. Industry partnerships and government support will be instrumental in scaling up renewable energy projects, fostering innovation, and creating a regulatory environment that encourages sustainable practices. Business leaders have an opportunity to not only adapt to these changes but to drive the market towards a more sustainable future.

Conclusion

Sustainable technology solutions are poised to reshape the renewable energy sector. By adopting these technologies, businesses can substantially reduce their environmental footprint while also reaping economic rewards: lower carbon emissions, more efficient operations, and a contribution to a more sustainable planet. Nevertheless, it is essential to navigate the associated complexities and costs with careful consideration and strategic foresight. Companies must weigh the long-term benefits against the initial investments and operational challenges to ensure a smooth transition.

As we delve deeper into how sustainable tech can reshape industries, our next blog post will explore the interplay between sustainable technologies and energy efficiency. It will shed light on how these advancements can further optimize business operations, drive sustainability efforts, and ultimately bolster profitability. In the meantime, organizations should start considering how to incorporate sustainable tech as they move towards a more eco-friendly and economically advantageous future. By doing so, they can not only enhance their corporate reputation but also contribute to a more resilient and sustainable global economy. Stay tuned to discover the many ways these technological advancements can elevate your business performance and environmental commitment.