Virtual Power Plants (VPPs) are poised to reshape the traditional energy market. With Machine Learning (ML), VPPs can analyze data in real time and make decisions that keep energy distribution efficient while reducing costs. In this blog post, we’ll explore the effects of Machine Learning on Virtual Power Plants and look at companies that are already adopting this technology.

As the demand for electricity continues to increase, traditional power plants are struggling to keep up. With aging infrastructure and a growing focus on renewable energy, it has become increasingly challenging to meet the demands of consumers while maintaining reliability and affordability. This is where Virtual Power Plants powered by Machine Learning come in. With ML algorithms, VPPs can predict energy production and consumption patterns, allowing for more accurate and efficient energy distribution. ML can also optimize the use of renewable energy sources, such as solar panels or wind turbines, by predicting when they will produce the most power.

Improved Reliability

Because VPPs are designed to work with multiple sources of renewable energy, their algorithms can spread load across those sources and respond when one of them has a problem. With real-time data analysis, a failing energy supply can be identified and addressed quickly. With Machine Learning, VPPs can also predict when supply will fall short and make the necessary changes automatically. This level of reliability is crucial for the stability of the energy grid and helps ensure a consistent supply of power to consumers.
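To make the idea of predicting a shortfall concrete, here is a minimal sketch. It assumes the VPP already has hourly forecasts for supply and demand (in a real system those would come from ML models) and simply flags the intervals where supply cannot cover demand plus a reserve margin. The function name, the `reserve_kw` parameter, and all numbers are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Shortfall:
    interval: int        # index of the forecast interval
    deficit_kw: float    # how far supply falls below demand plus reserve


def find_shortfalls(supply_kw, demand_kw, reserve_kw=0.0):
    """Return the intervals where forecast supply cannot cover demand plus reserve."""
    if len(supply_kw) != len(demand_kw):
        raise ValueError("supply and demand forecasts must have the same length")
    shortfalls = []
    for i, (supply, demand) in enumerate(zip(supply_kw, demand_kw)):
        deficit = demand + reserve_kw - supply
        if deficit > 0:
            shortfalls.append(Shortfall(interval=i, deficit_kw=deficit))
    return shortfalls


# Hypothetical hourly forecasts in kW (illustrative numbers only).
forecast_supply = [500.0, 480.0, 300.0, 520.0]
forecast_demand = [400.0, 450.0, 420.0, 410.0]
alerts = find_shortfalls(forecast_supply, forecast_demand, reserve_kw=50.0)
for alert in alerts:
    print(f"interval {alert.interval}: short by {alert.deficit_kw:.0f} kW")
```

In this example the second and third intervals are flagged, which is the point at which a VPP could call on storage or another source before the gap reaches consumers.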

Enhanced Efficiency

Virtual Power Plants improve energy distribution efficiency, which is particularly useful at peak times or during sudden surges in power demand. ML models monitor energy demand and supply in real time and correct power distribution so that the system stays in balance and avoids overloads and outages. With ML, VPPs can optimize energy distribution while reducing wasted energy and unnecessary costs.
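One simple way to picture how a VPP keeps supply and demand in balance is a greedy merit-order dispatch, where the cheapest available source is used first. The sketch below is an illustration of that idea under simplifying assumptions (a single interval, no network constraints), not a description of any particular vendor's system. The source names, capacities, and costs are made up.

```python
def dispatch(demand_kw, sources):
    """Greedy merit-order dispatch: use the cheapest available source first.

    `sources` is a list of (name, available_kw, cost_per_kwh) tuples.
    Returns (allocation dict, unmet demand in kW).
    """
    remaining = demand_kw
    allocation = {}
    for name, available_kw, _cost in sorted(sources, key=lambda s: s[2]):
        if remaining <= 0:
            break
        used = min(available_kw, remaining)
        if used > 0:
            allocation[name] = used
            remaining -= used
    return allocation, max(remaining, 0.0)


# Hypothetical portfolio; names, capacities, and costs are illustrative.
portfolio = [
    ("gas_peaker", 400.0, 0.12),
    ("solar", 250.0, 0.00),
    ("battery", 100.0, 0.03),
    ("wind", 150.0, 0.01),
]
plan, unmet = dispatch(600.0, portfolio)
print(plan, unmet)
```

Here the solar, wind, and battery capacity is used in full and the gas peaker only covers the last 100 kW, which is how cheaper renewable output displaces more expensive generation.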

Flexibility

Virtual Power Plants with Machine Learning capabilities are highly responsive and have been shown to adapt to changing energy demands. The system monitors changes in demand, weather patterns, and other factors, and adjusts accordingly. By predicting the energy needed, the VPP can send the correct amount of energy exactly when and where it’s required. This kind of adaptability ensures that resources are not wasted and that the infrastructure can be utilized to its maximum potential.
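As a toy illustration of predicting demand from weather, the sketch below fits a straight line between temperature and demand using ordinary least squares, then uses it to estimate demand for tomorrow's forecast temperature. Real VPP models use far more inputs and more capable algorithms. The data points and variable names here are hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two or more paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical history: afternoon temperature (deg C) vs. demand (kW).
temps_c = [20.0, 24.0, 28.0, 32.0]
demand_kw = [300.0, 340.0, 380.0, 420.0]
slope, intercept = fit_line(temps_c, demand_kw)

tomorrow_temp_c = 30.0
predicted_kw = slope * tomorrow_temp_c + intercept
print(f"predicted demand: {predicted_kw:.0f} kW")
```

With a prediction like this in hand, the VPP can line up the right amount of supply before the demand actually arrives.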

Cost Reductions

By optimizing energy distribution, a VPP can reduce the number of fossil fuel-based power plants needed to meet demand, which lowers both CO2 emissions and costs. Predicting how much renewable energy will be available, and making sure it is used efficiently, lets VPPs operate on a significantly lower budget. The optimization works like this: the ML algorithm forecasts the periods of maximum output from renewable sources such as solar panels and wind turbines. By storing energy captured during these peak periods and distributing it when demand is high, VPPs reduce their reliance on costly non-renewable sources.
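The store-now, use-later idea can be sketched with a very simple battery simulation. It assumes a battery that charges from any renewable surplus and discharges whenever renewables fall short, and it ignores losses and charge-rate limits. The interval values and the 40 kWh capacity are invented for illustration.

```python
def simulate_storage(renewable_kwh, demand_kwh, capacity_kwh):
    """Charge a battery from renewable surplus and discharge it during deficits.

    Returns the energy (kWh) that still has to come from other sources in
    each interval. Losses and charge-rate limits are ignored for simplicity.
    """
    stored = 0.0
    other_supply = []
    for renewable, demand in zip(renewable_kwh, demand_kwh):
        surplus = renewable - demand
        if surplus >= 0:
            # Store what fits; anything beyond capacity is curtailed.
            stored = min(capacity_kwh, stored + surplus)
            other_supply.append(0.0)
        else:
            discharge = min(stored, -surplus)
            stored -= discharge
            other_supply.append(-surplus - discharge)
    return other_supply


# Hypothetical four-interval day: midday solar peak, evening demand peak.
renewable = [10.0, 80.0, 60.0, 5.0]
demand = [30.0, 40.0, 50.0, 70.0]

without_battery = simulate_storage(renewable, demand, capacity_kwh=0.0)
with_battery = simulate_storage(renewable, demand, capacity_kwh=40.0)
print(sum(without_battery), sum(with_battery))
```

In this made-up day, the battery cuts the energy needed from other sources from 85 kWh to 45 kWh, all of it saved during the evening peak.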

The Impacts!

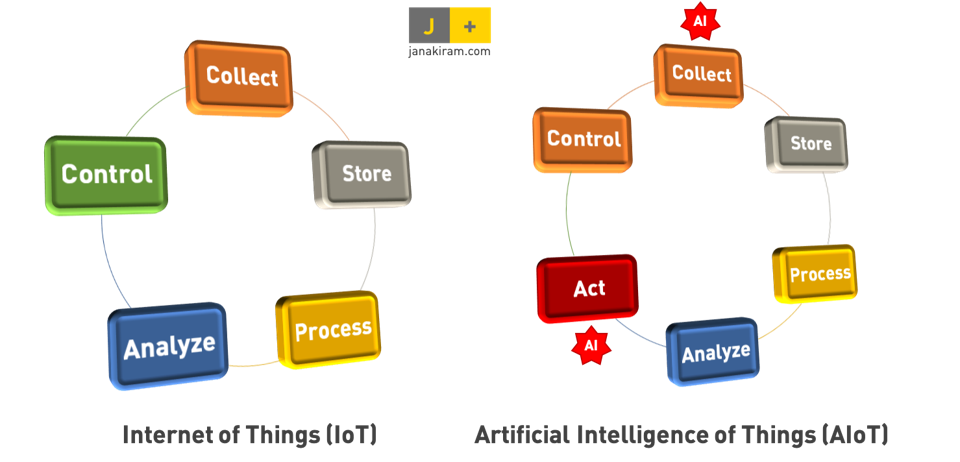

Machine Learning is making significant strides in shaping Virtual Power Plants (VPPs). Here are some ways in which Machine Learning is effecting change:

Predictive Analytics: Machine Learning algorithms work to analyze historical and real-time data, predicting energy demand, supply fluctuations, and market conditions. This foresight allows VPPs to optimize energy production and distribution in advance, ensuring efficiency.

Optimized Resource Allocation: Machine Learning empowers VPPs to dynamically allocate energy resources based on real-time demand. This includes the effective management of renewable energy sources, storage systems, and traditional power generation for maximum utilization.

Demand Response Optimization: Machine Learning is ramping up the ability of VPPs to take part in demand response programs. By recognizing patterns in energy consumption, the system can proactively adjust energy usage during peak times or low-demand periods, contributing to grid stability.

Fault Detection and Diagnostics: With Machine Learning algorithms, anomalies and faults in the energy system can be detected, allowing swift identification and resolution of issues, thereby improving the reliability of VPPs.

Market Participation Strategies: Machine Learning aids VPPs in developing sophisticated energy trading strategies. It analyzes market trends, pricing, and regulatory changes, enabling VPPs to make informed decisions and thereby maximizing revenue while minimizing costs.

Grid Balancing: VPPs leverage Machine Learning to balance energy supply and demand in real time. This is crucial for maintaining grid stability, particularly as the proportion of intermittent renewable energy sources increases.

Energy Storage Optimization: Machine Learning optimizes the use of energy storage systems within VPPs, determining the most effective times to store and release energy, which makes storage more efficient. ML algorithms can also predict battery degradation and optimize maintenance schedules.

Cybersecurity: Machine Learning plays a critical role in enhancing the cybersecurity of VPPs. It continuously monitors for unusual patterns or potential threats, providing a robust line of defense.

In the ever-evolving world of technology, the partnership between Machine Learning and VPPs is proving to be a game-changer.
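Several items in the list above (fault detection and the monitoring side of cybersecurity) come down to spotting readings that do not fit the usual pattern. A minimal sketch of that idea uses the modified z-score, a standard robust statistic based on the median absolute deviation. It is a stand-in for the ML models a real VPP would use, and the inverter readings and the 3.5 threshold are assumptions for illustration.

```python
from statistics import median


def find_anomalies(readings, threshold=3.5):
    """Flag readings whose modified z-score (based on the median absolute
    deviation) exceeds `threshold`. Returns the indices of flagged readings."""
    med = median(readings)
    mad = median(abs(r - med) for r in readings)
    if mad == 0:
        # At least half the readings equal the median; flag anything that differs.
        return [i for i, r in enumerate(readings) if r != med]
    return [
        i for i, r in enumerate(readings)
        if abs(0.6745 * (r - med) / mad) > threshold
    ]


# Hypothetical inverter output in kW; the drop at index 5 mimics a fault.
inverter_kw = [49.8, 50.1, 50.0, 49.9, 50.2, 12.0, 50.0, 49.7]
print(find_anomalies(inverter_kw))
```

The median-based score is used here rather than a plain mean and standard deviation because a single large outlier would otherwise distort the very baseline it is being compared against.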

Challenges and Opportunities

As with any technological advancement, this transition comes with its own set of difficulties. For instance, managing and securing the massive amounts of data generated by various energy sources is a significant challenge. Privacy becomes a crucial concern and calls for robust cybersecurity measures. Furthermore, the complexity of running Machine Learning algorithms requires a skilled workforce, and ongoing training is indispensable to harness the full potential of these technologies.

However, amid these challenges, there are several noteworthy opportunities. Machine Learning brings predictive analytics to the table, offering the possibility to optimize energy production and consumption, which leads to increased efficiency. VPPs, coordinating distributed energy resources, open the door to more resilient and decentralized energy systems. The integration of renewable energy sources is a substantial opportunity, promoting sustainability while reducing environmental impact.

Machine Learning also optimizes energy trading strategies within VPPs, paving the way for novel economic models and revenue streams for energy producers. In essence, while data management, security, and skill requirements present challenges, the amalgamation of Machine Learning and VPPs offers a promising opportunity to revolutionize energy systems. It holds the potential to make these systems more efficient, sustainable, and responsive to the evolving demands of the future.

Companies Using Machine Learning in Virtual Power Plants

Kraftwerke: Presented as the world’s largest open market for power and flexibility, the company has been a leader in integrating Machine Learning techniques into energy management systems. By using ML algorithms in its VPPs, it can accurately forecast energy demand and balance energy supply and demand in real time.

AutoGrid: Offers flexibility management solutions that optimize distributed energy resources (DERs) to improve grid reliability.

Enbala: Now a part of Generac, Enbala has also adopted Machine Learning for its distributed energy platform, concentrating on enhancing the performance of DERs within VPPs.

Siemens: Has been involved in projects that incorporate Machine Learning into VPPs, aiming to boost the efficiency and flexibility of power systems through advanced analytics.

Doosan GridTech: Harnesses machine learning and advanced controls to optimize the performance of distributed energy resources, focusing on improving the reliability and efficiency of VPPs.

Advanced Microgrid Solutions (AMS): Has implemented Machine Learning algorithms to fine-tune the operations of energy storage systems within VPPs. Their platform is designed to provide grid services and maximize the value of DERs.

ABB: A pioneer in power and automation technologies, ABB has explored Machine Learning applications in VPP management and control, with solutions concentrating on grid integration and optimization of renewable energy sources.

General Electric (GE): The multinational conglomerate is also involved in projects that apply Machine Learning to the optimization and control of DERs within VPPs, bringing its vast industry knowledge to the table.

Future Possibilities

Looking ahead, the fusion of Machine Learning and Virtual Power Plants (VPPs) is poised to revolutionize the global energy landscape. The predictive analytics capabilities of Machine Learning hint at a future where energy systems are highly adaptive and able to forecast demand patterns accurately and proactively. The potential for VPPs, supercharged by Machine Learning algorithms, points towards a future where energy grids are fully optimized and decentralized.

The integration of renewable energy sources, enhanced by advanced Machine Learning technologies, promises a future where sustainable energy production is standard practice, not an exception. The refinement of energy trading strategies within VPPs could herald a new era of economic models, fostering innovative revenue generation avenues for energy producers.

As these technologies continue to mature, the future of energy looks dynamic and resilient, with Machine Learning and VPPs serving as key pillars in delivering efficiency, sustainability, and adaptability. Together, they are set to cater to the ever-changing demands of the global energy landscape, heralding an era of unprecedented progress and potential.

In conclusion, Machine Learning is driving the development of Virtual Power Plants, and integrating ML into VPPs can lead to a more effective, efficient, and sustainable energy system. The benefits are numerous: intelligent algorithms help distribute energy evenly, reduce energy costs, and enable the VPP to adapt to changing market demands. With its potential to increase reliability, reduce costs, and lower CO2 emissions, Machine Learning in Virtual Power Plants looks set to play a central role in the future of energy operations.