Keeping up with database technology is both necessary and genuinely interesting. In 2024, businesses build their digital infrastructure on a wide range of databases, each strong in its own niche. For tech executives and enthusiasts alike, knowing which database technologies lead the field, and why, is crucial. In this blog we compare and contrast the year’s leading databases to identify their strengths and the applications each suits best.

MySQL:

MySQL remains a top choice for reliability and cost-effectiveness, known for its ease of deployment and management. Its support for ACID (atomicity, consistency, isolation, and durability) transactions, provided by the default InnoDB storage engine, means a transaction either completes fully or leaves the data untouched, which is essential for modern enterprises. MySQL’s extensive community support and compatibility with various operating systems make it a practical option for businesses of all sizes.
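To make the atomicity part of ACID concrete, here is a minimal sketch of an all-or-nothing transfer between two accounts. It uses Python’s built-in `sqlite3` module as a stand-in so that it runs without a MySQL server; the `accounts` table, the `transfer` helper, and the balances are hypothetical, and the same pattern should carry over to a MySQL connection with a transactional storage engine.

```python
import sqlite3


def transfer(conn, src, dst, amount):
    """Move `amount` from src to dst; either both updates apply or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        # The debit would overdraw the account, so the earlier credit is undone too.
        return False


def balances(conn):
    """Return the current balances as a {name: balance} dict."""
    return dict(conn.execute("SELECT name, balance FROM accounts"))


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE accounts ("
    "name TEXT PRIMARY KEY, "
    "balance INTEGER NOT NULL CHECK (balance >= 0))"
)
with conn:
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)", [("alice", 100), ("bob", 50)]
    )

ok = transfer(conn, "alice", "bob", 30)       # valid transfer, committed
failed = transfer(conn, "alice", "bob", 500)  # overdraft, fully rolled back
print(ok, failed, balances(conn))
```

The second transfer credits the destination first and then fails on the debit, yet the final balances show no trace of the credit: the whole transaction was rolled back.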

Beyond these fundamentals, MySQL offers fast-loading utilities and a range of memory caches that simplify server maintenance and administration. It works with a long list of programming languages, PHP most notably, so it fits into almost any technology stack. It also delivers high-speed results without sacrificing core functionality.

MySQL, offered by Oracle, provides a balance between cost efficiency and performance. Commercial pricing ranges from $2,140 to $6,420 for 1-4 socket servers and from $4,280 to $12,840 for servers with five or more sockets. The Community Edition is open source under the GNU GPL, which allows free usage and customization. Explore MySQL at https://www.mysql.com/.

PostgreSQL:

PostgreSQL protects data integrity with primary keys, foreign keys, explicit locks, advisory locks, and exclusion constraints. Together these features coordinate data access, keep transactions consistent, and guard the database against anomalies. PostgreSQL is also strong on SQL features: Multi-Version Concurrency Control (MVCC) lets readers and writers work without blocking each other, which supports high throughput, and complex queries, including subselects, are fully supported, which appeals to SQL aficionados. Streaming replication provides high availability and disaster recovery.
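As a rough illustration of how key constraints reject bad data, the sketch below enforces a primary key and a foreign key. It uses Python’s built-in `sqlite3` module as a stand-in so that it runs without a PostgreSQL server; the `customers` and `orders` tables and the `insert_order` helper are hypothetical. PostgreSQL enforces foreign keys by default, whereas SQLite needs the `PRAGMA` shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite-specific opt-in
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, "
    "customer_id INTEGER NOT NULL REFERENCES customers(id), "
    "total INTEGER NOT NULL)"
)


def insert_order(conn, customer_id, total):
    """Insert an order; return False if a constraint rejects it."""
    try:
        with conn:  # commit on success, roll back on error
            conn.execute(
                "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
                (customer_id, total),
            )
        return True
    except sqlite3.IntegrityError:
        return False


with conn:
    conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")

accepted = insert_order(conn, 1, 250)   # customer 1 exists
rejected = insert_order(conn, 999, 10)  # no such customer: foreign key violation
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(accepted, rejected, order_count)
```

Only the order that points at an existing customer is stored; the orphaned one never reaches the table.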

In contrast to many other database technologies, PostgreSQL is a product of community-driven innovation and is provided free of charge. This enterprise-grade database system has no price tag, yet it does not skimp on capabilities: it owes its development and continued refinement to a dedicated group of volunteers and backing enterprises. It can be used with complete freedom under the liberal open-source PostgreSQL License. To explore PostgreSQL or join its community, head over to https://www.postgresql.org/.

Microsoft SQL Server:

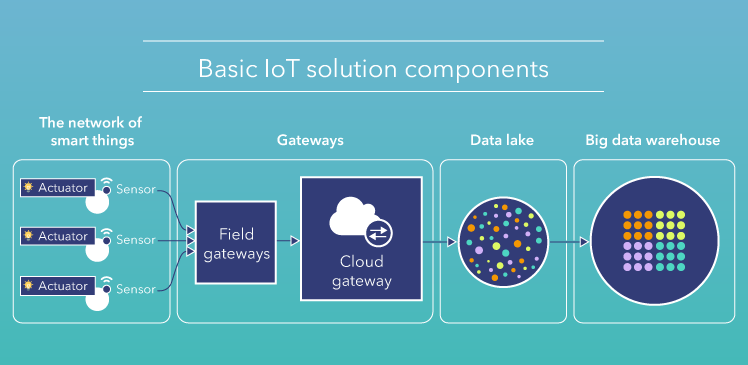

Microsoft SQL Server is not only a pillar of traditional relational database management systems (RDBMS) but also a player in the ever-expanding Big Data landscape. Organizations can use it to build data lakes: large repositories that bring disparate data, structured or not, into one cohesive pool. Users can then query the entire dataset directly, without having to move or replicate the data.

For security, a paramount concern in today’s data-driven world, Microsoft SQL Server provides tools for data classification, protection, and monitoring. It continuously scans for anomalies and raises timely alerts on suspicious activity, security gaps, and configuration errors.

Microsoft SQL Server’s graphical tool empowers users to design, create tables, and explore data without intricate syntax. It seamlessly integrates data from various sources via an extensive connector library. With new transformations in the SQL Server Analysis Services (SSAS) Tabular Model, users gain advanced capabilities to manipulate and combine data.

Microsoft SQL Server offers diverse editions to suit various enterprise needs. The Enterprise edition is priced at $15,123 per two-core pack, while the Standard edition offers per-core licensing ($3,945 per two-core pack), server licensing ($989 per server), and a client access license (CAL) option ($230 per CAL). Volume licensing and hosting channels further influence pricing. Learn more at https://www.microsoft.com/en-us/sql-server/sql-server-2022-pricing.
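To see how the two Standard edition licensing models compare, here is a small worked calculation using the list prices quoted above. It is a sketch under stated assumptions: the per-core figure is treated as the price of a two-core pack, a minimum of four core licenses per processor is assumed, the server is assumed to have a single processor, and every user is assumed to need one CAL. The function names are made up for this example, and real quotes depend on volume licensing.

```python
import math

# List prices in USD as quoted in this article; they may change.
STANDARD_TWO_CORE_PACK = 3945
STANDARD_SERVER = 989
STANDARD_CAL = 230
MIN_CORES_PER_PROCESSOR = 4  # assumption: licensing minimum per processor


def per_core_cost(cores):
    """Cost of licensing every core of a single-processor server."""
    licensed_cores = max(cores, MIN_CORES_PER_PROCESSOR)
    packs = math.ceil(licensed_cores / 2)  # licenses are sold in two-core packs
    return packs * STANDARD_TWO_CORE_PACK


def server_cal_cost(users, servers=1):
    """Cost of server licenses plus one CAL per user."""
    return servers * STANDARD_SERVER + users * STANDARD_CAL


def break_even_users(cores):
    """Largest user count for which Server + CAL costs no more than per-core."""
    return (per_core_cost(cores) - STANDARD_SERVER) // STANDARD_CAL


print(per_core_cost(8))       # 15780
print(server_cal_cost(64))    # 15709
print(break_even_users(8))    # 64
```

Under these assumptions, an eight-core server with up to 64 users is cheaper on Server + CAL licensing; beyond that, per-core licensing wins because its cost does not grow with the number of users.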

MongoDB:

MongoDB’s architecture is built so that growing data volumes do not become an impediment. It scales horizontally through sharding, with clusters that can grow past a hundred nodes and manage millions of documents. Its adoption across many industries is a testament to its ability to handle large and intricate datasets. MongoDB provides high availability through replica sets, which keep multiple copies of the data on different servers. If a server fails, another member of the replica set takes over, so the service stays available and the data remains durable.

MongoDB, a prominent figure in the NoSQL landscape, provides a free entry point through MongoDB Atlas’s perpetual free tier. Celebrated for scalability and developer-friendliness, MongoDB remains a strong player in data management. Discover more at https://www.mongodb.com/pricing.

Oracle:

Oracle’s resilience and data recovery features are vital for uninterrupted business operations. Real Application Clusters (RAC) ensure high availability by enabling multiple instances on different servers to access a single database. This fault-tolerant and scalable setup underscores Oracle’s commitment to continuous operation, even during server failures.

Oracle’s service offerings cater to a wide array of demands, providing precise solutions for diverse business requirements. Starting with the Oracle Database Standard Edition, which offers essential features for typical workloads, users can scale up to the Enterprise Edition for more comprehensive capabilities. Additionally, Oracle provides specialized tiers such as the High Performance and Extreme Performance editions, designed to meet the demands of high-throughput and mission-critical environments.

Each tier is designed to deliver strong performance and reliability, so that businesses can manage their data infrastructure effectively. Oracle’s pricing structure also accommodates varying usage scenarios, with options for flexible scaling based on virtual CPU (vCPU) usage. Oracle’s pricing page has the details.

Remote Dictionary Server (Redis):

Redis shines in caching and in-memory data handling, offering unparalleled speed and versatility. Supporting various data structures like strings, lists, hashes, bitmaps, HyperLogLogs, and sets, Redis caters to the diverse needs of modern applications. Moreover, Redis seamlessly integrates with popular programming languages like Java, Python, PHP, C, C++, and C#, ensuring compatibility across different development environments and accelerating data-intensive operations.

Redis offers a dynamic ecosystem where free open-source frameworks coexist with commercial variants. While the community version is free, enterprise solutions like Redis Enterprise, with enhanced features and support, operate on a subscription model. Explore Redis offerings on their website.

Elasticsearch:

Elasticsearch is designed for scalability from the ground up. Its architecture is distributed out of the box: data is split into shards and spread, with replicas, across multiple servers and nodes, which improves availability and resilience under demanding workloads. This design is not just a matter of capability but also of reliability, because as data volumes grow, a cluster can absorb the increase by adding nodes.
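The idea of spreading documents across servers can be sketched with simple hash-based routing: hash a document’s ID and take the remainder modulo the number of primary shards. The snippet below is only an illustration of that idea; CRC32 stands in for whatever hash function Elasticsearch actually uses, and `route_to_shard` and the document IDs are hypothetical.

```python
import zlib
from collections import Counter


def route_to_shard(doc_id: str, num_primary_shards: int) -> int:
    """Pick a shard for a document: stable hash of the ID, modulo shard count."""
    return zlib.crc32(doc_id.encode("utf-8")) % num_primary_shards


# Distribute 1,000 hypothetical documents over 3 primary shards.
doc_ids = [f"doc-{i}" for i in range(1000)]
placement = Counter(route_to_shard(doc_id, 3) for doc_id in doc_ids)
print(dict(placement))
```

Because the shard is derived from the ID alone, any node can work out where a document lives without a lookup table. The flip side is that changing the shard count changes the result of the modulo, which is one reason the number of primary shards is normally chosen when an index is created.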

Elasticsearch, a prominent member of the NoSQL ecosystem, adopts a dual licensing model, offering users the choice between the Server Side Public License (SSPL) and the Elastic License. This lets organizations select the licensing option that best fits their needs and compliance requirements. In addition, Elastic bills usage in Elastic Consumption Units (ECUs), which aligns expenditure with usage. Organizations can therefore scale their Elasticsearch deployments according to their requirements, without being bound by traditional fixed licensing models. Elastic’s pricing page has the details.

Cassandra:

Cassandra excels in fine-tuning consistency levels for data operations, allowing developers to balance performance and accuracy. Its column-family data model adeptly handles semi-structured data, providing structure without compromising schema flexibility. With the Cassandra Query Language (CQL), which resembles SQL, transitioning from traditional databases is simplified. This, coupled with standard APIs, positions Cassandra as a scalable, reliable, and user-friendly database choice, lowering adoption barriers for tech teams.
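The trade-off behind tunable consistency comes down to simple arithmetic: with a replication factor of N, a read is guaranteed to overlap the latest acknowledged write when the number of replicas read (R) plus the number of replicas written (W) exceeds N. The sketch below works through that rule; the function names are made up for this example.

```python
def quorum(replication_factor: int) -> int:
    """Number of replicas that form a majority (the QUORUM level)."""
    return replication_factor // 2 + 1


def reads_see_latest_write(read_replicas: int, write_replicas: int,
                           replication_factor: int) -> bool:
    """True when every read set must overlap every write set (R + W > N)."""
    return read_replicas + write_replicas > replication_factor


rf = 3
q = quorum(rf)
print(q)                                    # 2
print(reads_see_latest_write(q, q, rf))     # True: QUORUM reads and writes
print(reads_see_latest_write(1, 1, rf))     # False: ONE reads and writes
print(reads_see_latest_write(1, rf, rf))    # True: write to ALL, read from ONE
```

Reading and writing at ONE is the fastest combination but may return stale data, while QUORUM on both sides costs an extra replica round trip in exchange for reads that reflect the latest write.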

Apache Cassandra, a rising name in the NoSQL landscape, is free and open source. Commercial vendors offer enterprise-grade support and features. Amazon Keyspaces (for Apache Cassandra), a managed Cassandra-compatible service, bills $1.45 per million write request units and $0.29 per million read request units. Explore more at https://cassandra.apache.org/_/index.html.

MariaDB:

MariaDB stands out with advanced features like microsecond precision, crucial for time-sensitive applications, table elimination for optimized query processing, scalar subqueries for enhanced functionality, and parallel replication for faster database synchronization and analytics. Its compatibility with major cloud providers simplifies deployment and management in cloud infrastructures. MariaDB supports various storage engines, including Aria for crash safety, MyRocks for efficiency, and Spider for sharding across servers, offering flexibility and functionality for diverse data types and use cases.

MariaDB is a forerunner in the open-source database community, committed to cost-effectiveness and efficiency. For newcomers to the AWS ecosystem, Amazon RDS for MariaDB offers an introductory Free Tier with enough resources to pilot a database project. For details on using this SQL database in your own projects, consult MariaDB’s website.

IBM DB2:

DB2 is a trusted enterprise data server, facilitating seamless deployment across on-premises data centers and public/private clouds. Its flexibility enables organizations to adopt hybrid data management approaches aligned with operational needs and strategic goals. DB2 excels in data compression, featuring adaptive compression, value compression, and archive compression, significantly reducing storage footprints and costs. Administrative tasks are simplified with DB2’s self-tuning and self-optimizing features, driven by machine learning. This ensures optimal database performance with minimal intervention, reducing time and effort for routine maintenance and tuning.

IBM offers a free, no-obligation trial of DB2 on IBM Cloud. Users can upgrade to a paid tier at USD 99 per month, which includes full functionality and USD 500 in credits. To get started, visit https://www.ibm.com/products/db2/pricing.

SQLite:

SQLite’s appeal lies in its simplicity and ease of use. It operates without a separate server process, complex installation, or administration. A complete SQL database is stored in a single cross-platform disk file, requiring no configuration, making it highly portable and ideal for simplicity-focused scenarios. Additionally, SQLite adheres broadly to SQL standards, supporting commands like SELECT, INSERT, UPDATE, and DELETE, making it familiar to developers accustomed to other SQL databases. Being in the public domain, SQLite can be freely used and distributed without any restrictions, fees, or royalties.
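The single-file, zero-configuration model is easy to see from Python, whose standard library ships the `sqlite3` module. The sketch below creates a database file, runs the four commands mentioned above, then reopens the file to show that the data persisted. The `notes` table, its contents, and the temporary file location are hypothetical.

```python
import os
import sqlite3
import tempfile

# The whole database lives in this one file; no server or setup is needed.
db_path = os.path.join(tempfile.mkdtemp(), "notes.db")

conn = sqlite3.connect(db_path)
conn.execute("CREATE TABLE notes (id INTEGER PRIMARY KEY, body TEXT NOT NULL)")
with conn:  # one transaction for all four statements
    conn.execute("INSERT INTO notes (body) VALUES (?)", ("buy milk",))
    conn.execute("INSERT INTO notes (body) VALUES (?)", ("call bob",))
    conn.execute("UPDATE notes SET body = ? WHERE id = ?", ("buy oat milk", 1))
    conn.execute("DELETE FROM notes WHERE id = ?", (2,))
conn.close()

# Reopen the same file, as a different program could.
conn = sqlite3.connect(db_path)
rows = conn.execute("SELECT id, body FROM notes ORDER BY id").fetchall()
conn.close()
print(rows)  # [(1, 'buy oat milk')]
```

Copying `notes.db` to another machine is all it takes to move the database, which is what makes SQLite so convenient for embedded and desktop use.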

Because SQLite is in the public domain, no fees are levied for any usage. As an easily embeddable, lightweight relational database engine, it is the go-to choice for countless applications across the globe, with Microsoft not just embracing it but actively integrating it. Find out more on the SQLite website.

Amazon DynamoDB:

DynamoDB provides consistent single-digit millisecond response times for large-scale applications. Its architecture allows seamless, on-demand scaling without manual intervention, ensuring performance under varying demands. Multi-AZ deployments ensure high availability and fault tolerance, with fast failovers and data replication across three Availability Zones for accessibility and security. Native support for document and key-value data models optimizes performance for distinct access patterns, enhancing efficiency.

Amazon DynamoDB offers an attractive free tier with 25 GB of storage and 25 units each of write and read capacity, sufficient for roughly 200 million requests per month. Its on-demand billing charges $1.25 per million write request units and $0.25 per million read request units, so cost scales with demand. To review Amazon DynamoDB’s pricing structure, visit https://aws.amazon.com/dynamodb/pricing/.
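The numbers above can be checked with a little arithmetic. The sketch below estimates an on-demand bill from the quoted rates and shows where the 200 million figure for the free tier plausibly comes from. It assumes that one write capacity unit covers one write per second, that one read capacity unit covers two eventually consistent reads per second, that items are small enough to cost a single unit, and that the capacity is used every second of a 31-day month. The function names are made up for this example.

```python
# On-demand rates in USD as quoted in this article; they may change.
WRITE_PRICE_PER_MILLION = 1.25
READ_PRICE_PER_MILLION = 0.25
SECONDS_PER_DAY = 86_400


def on_demand_cost(write_units: int, read_units: int) -> float:
    """Monthly on-demand request cost for the given request unit counts."""
    return (write_units / 1_000_000 * WRITE_PRICE_PER_MILLION
            + read_units / 1_000_000 * READ_PRICE_PER_MILLION)


def free_tier_requests(days: int = 31, wcu: int = 25, rcu: int = 25,
                       eventually_consistent: bool = True) -> int:
    """Requests per month if provisioned capacity is fully used every second."""
    seconds = days * SECONDS_PER_DAY
    writes = wcu * seconds
    reads_per_rcu = 2 if eventually_consistent else 1
    reads = rcu * reads_per_rcu * seconds
    return writes + reads


print(on_demand_cost(10_000_000, 50_000_000))  # 25.0
print(free_tier_requests())                    # 200880000
```

Under these assumptions, 10 million writes plus 50 million reads cost $25 on demand, and the free tier’s 25 + 25 capacity units add up to just over 200 million requests in a 31-day month.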

In Conclusion

While relational databases continue to shoulder the bulk of transactional workloads, NoSQL databases have adeptly found their place in handling the complexities of semi-structured and unstructured data. The choice of database, as always, is contingent on the specific needs and nuances of individual businesses. As we continue to delve into the big data era, it is not just the deployment of databases that is vital but also the synthesis of these technologies with wider business strategies.