The integration of augmented reality (AR) and robotics is changing how robots are built and used across many industries. As the technology becomes more prevalent, it is opening up new capabilities. For instance, robots can now recognize objects in a 3D environment, which lets them manipulate those objects more effectively and take on tasks that were previously out of reach.

In this blog post, we will explore the impact of augmented reality on robotics and how it has moved to the forefront of innovation. We will look at the effects of AR technology on the robotics industry, including new developments and increased efficiency.

Increased Efficiency

Using AR, robots can identify, locate, and sort objects quickly and accurately, which improves performance and overall productivity. For instance, AR technology used in manufacturing has enabled robots to reduce errors on assembly lines. The robots can recognize a product and its details and perform their assigned tasks with precision. This reduces both errors and the time spent on each task, increasing overall productivity. Below are some examples of how AR is further shaping the field of robotics:

Robot Programming:

AR can simplify the programming of robots by overlaying intuitive graphical interfaces onto the robot’s workspace. This allows operators to teach robots tasks by physically demonstrating them, reducing the need for complex coding and making it accessible to non-programmers.

Maintenance and Troubleshooting:

When robots require maintenance or encounter issues, technicians can use AR to access digital manuals, schematics, and step-by-step repair guides overlaid on the physical robot. This speeds up troubleshooting and maintenance, reducing downtime.

Training and Simulation:

AR-based training simulators provide a safe and cost-effective way to train robot operators. Trainees can interact with virtual robots and practice tasks in a simulated environment, which helps them become proficient in operating and maintaining actual robots more quickly.

Remote Operation and Monitoring:

AR allows operators to control and monitor robots remotely. This is particularly useful in scenarios where robots are deployed in hazardous or inaccessible environments, such as deep-sea exploration or space missions.

Quality Control and Inspection:

Robots equipped with AR technology can perform high-precision inspections and quality control tasks. AR overlays real-time data and images onto the robot’s vision, helping it identify defects, measure tolerances, and make real-time adjustments to improve product quality.
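To make the idea of measuring tolerances concrete, here is a minimal sketch of a pass/fail check whose result could drive an AR overlay. The `Tolerance` class, the `inspect` function, and the overlay colors are hypothetical names chosen for illustration; they do not come from any particular AR or robotics toolkit.

```python
from dataclasses import dataclass


@dataclass
class Tolerance:
    """Hypothetical tolerance band for one dimension, in millimeters."""
    nominal_mm: float
    plus_mm: float
    minus_mm: float


def inspect(measured_mm: float, tol: Tolerance) -> dict:
    """Compare a measurement to its tolerance band and pick an overlay color."""
    deviation = measured_mm - tol.nominal_mm
    passed = -tol.minus_mm <= deviation <= tol.plus_mm
    return {
        "deviation_mm": round(deviation, 3),
        "pass": passed,
        # Assumed convention: green overlay for in-tolerance, red for defects.
        "overlay_color": "green" if passed else "red",
    }


shaft = Tolerance(nominal_mm=10.0, plus_mm=0.05, minus_mm=0.05)
print(inspect(10.04, shaft))
print(inspect(10.08, shaft))
```

In a real system the measurement would come from the robot's vision pipeline; here it is passed in directly to keep the example self-contained.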

Inventory Management:

In warehouses and manufacturing facilities, AR-equipped robots can efficiently manage inventory. They use AR to recognize and locate items, helping in the organization, picking, and restocking of products.

Teleoperation for Complex Tasks:

For tasks that require human judgment and dexterity, AR can assist teleoperators in controlling robots remotely. The operator can see through the robot’s cameras, receive additional information, and manipulate objects in the robot’s environment, for example when defusing bombs or performing delicate surgical procedures.

Robotics Research and Development:

Researchers and engineers working on robotics projects can use AR to visualize 3D models, simulations, and data overlays during the design and development phases. This aids in testing and refining robotic algorithms and mechanics.

Robot Fleet Management:

Companies with fleets of robots can employ AR to monitor and manage the entire fleet efficiently. Real-time data and performance metrics can be displayed through AR interfaces, helping organizations optimize robot usage and maintenance schedules.
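As a rough illustration of the kind of logic that could sit behind a fleet dashboard, the sketch below flags robots that are due for maintenance. The `RobotStatus` fields and the thresholds (500 hours, 2% error rate) are assumptions made for this example, not figures from any vendor.

```python
from dataclasses import dataclass


@dataclass
class RobotStatus:
    """Hypothetical per-robot metrics an AR fleet view might display."""
    robot_id: str
    hours_since_service: float
    error_count: int
    tasks_completed: int


def maintenance_queue(fleet, max_hours=500.0, max_error_rate=0.02):
    """Return IDs of robots needing service, most overdue first."""
    flagged = []
    for robot in fleet:
        if robot.tasks_completed:
            error_rate = robot.error_count / robot.tasks_completed
        else:
            error_rate = 0.0
        if robot.hours_since_service >= max_hours or error_rate > max_error_rate:
            flagged.append(robot)
    # Sort by hours since last service, longest first.
    flagged.sort(key=lambda r: r.hours_since_service, reverse=True)
    return [r.robot_id for r in flagged]


fleet = [
    RobotStatus("A", 120.0, 1, 400),
    RobotStatus("B", 610.0, 0, 900),
    RobotStatus("C", 300.0, 30, 1000),
]
print(maintenance_queue(fleet))
```

An AR interface could then highlight the flagged robots in the operator's view.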

Top Companies that Utilize Augmented Reality in Robotics

AR technology is widely adopted by companies worldwide to enhance their robotics systems. Notable players in this arena include Northrop Grumman, General Motors, and Ford Motor Company. Within the automotive industry, reliance on robotic systems is significant, and the integration of AR technology has yielded enhanced efficiency and reduced operating costs. Moreover, experts anticipate that AR technology could cut training time by up to 50% while boosting productivity by 30%.

Here are a few companies that employ augmented reality (AR) in the field of robotics:

- iRobot: iRobot, the maker of the popular Roomba vacuum cleaner robots, has incorporated AR into its mobile app. Users can use the app to visualize cleaning maps and see where their Roomba has cleaned, providing a more informative and interactive cleaning experience.

- Universal Robots: Universal Robots, a leading manufacturer of collaborative robots (cobots), offers an AR interface that allows users to program and control their robots easily. The interface simplifies the setup process and enables users to teach the robot by simply moving it through the desired motions.

- Vuforia (PTC): PTC’s Vuforia platform is used in various industries, including robotics. It provides AR tools for creating interactive maintenance guides, remote support, and training applications for robotic systems.

- KUKA: KUKA, a global supplier of industrial robots, offers the KUKA SmartPAD, which incorporates AR features. The SmartPAD provides a user-friendly interface for controlling and programming KUKA robots, making it easier for operators to work with the robots.

- RealWear: RealWear produces AR-enabled wearable devices, such as the HMT-1 and HMT-1Z1, which are designed for hands-free industrial use. These devices are used in robotics applications for remote support, maintenance, and inspections.

- Ubimax: Ubimax offers AR solutions for enterprise applications, including those in robotics. Their solutions provide hands-free access to critical information, making it easier for technicians to perform maintenance and repairs on robotic systems.

- Vicarious Surgical: Vicarious Surgical is developing a surgical robot that incorporates AR technology. Surgeons wear AR headsets during procedures, allowing them to see inside the patient’s body in real-time through the robot’s camera and control the robot’s movements with precision.

Collaborative Robotics

Collaborative robots, also known as cobots, are rapidly gaining traction across various industries. With augmented reality (AR), human workers can command and interact with cobots more easily, which improves tracking and precision. This collaboration also makes it easier to identify errors and resolve issues promptly. As a result, manufacturing processes become more streamlined, efficient, and productive.

Examples of Augmented Reality (AR) in Collaborative Robotics

Assembly and Manufacturing Assistance:

AR can provide assembly line workers with real-time guidance and visual cues when working alongside cobots. Workers wearing AR glasses can see overlays of where components should be placed, reducing errors and increasing assembly speed.

Quality Control:

In manufacturing, AR can be used to display quality control criteria and inspection instructions directly on a worker’s AR device. Cobots can assist by presenting parts for inspection, and any defects can be highlighted in real-time, improving product quality.

Collaborative Maintenance:

During maintenance or repair tasks, AR can provide technicians with visual instructions and information about the robot’s components. Cobots can assist in holding or positioning parts while the technician follows AR-guided maintenance procedures.

Training and Skill Transfer:

AR can facilitate the training of workers in cobot operation and programming. Trainees can learn how to interact with and program cobots through interactive AR simulations and tutorials, reducing the learning curve.

Safety Enhancements:

AR can display safety information and warnings to both human workers and cobots. For example, it can highlight no-go zones for the cobot, ensuring that it avoids contact with workers, or provide real-time feedback on human-robot proximity.
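The no-go zones and proximity feedback described above can be sketched in a few lines. This is a simplified 2D illustration: the `NoGoZone` class, the `proximity_state` function, and the 0.5 m and 1.5 m thresholds are assumptions for the example, not values from any safety standard.

```python
import math
from dataclasses import dataclass


@dataclass
class NoGoZone:
    """Hypothetical axis-aligned rectangle on the shop floor, in meters."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    def contains(self, x: float, y: float) -> bool:
        """True if the point lies inside the zone (edges included)."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def proximity_state(human_xy, robot_xy, stop_m=0.5, slow_m=1.5):
    """Classify human-robot distance into a state an AR overlay could show."""
    distance = math.dist(human_xy, robot_xy)
    if distance <= stop_m:
        return "stop"
    if distance <= slow_m:
        return "slow"
    return "normal"


workbench = NoGoZone(0.0, 0.0, 2.0, 1.0)
print(workbench.contains(1.0, 0.5))              # cobot target inside the zone?
print(proximity_state((0.0, 0.0), (1.0, 0.0)))   # worker 1 m from the cobot
```

A production system would rely on certified safety hardware for the actual stop decision; the AR layer would only visualize the zones and states.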

Collaborative Inspection:

In industries like aerospace or automotive manufacturing, workers can use AR to inspect large components such as aircraft wings or car bodies. AR overlays can guide cobots in holding inspection tools or cameras in the correct positions for thorough examinations.

Material Handling:

AR can optimize material handling processes by showing workers and cobots the most efficient paths for transporting materials. It can also provide real-time information about inventory levels and restocking requirements.
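One simple way to compute a route that an AR overlay could draw on the floor is a breadth-first search over a grid map. The sketch below assumes a hypothetical warehouse grid where `0` is free floor and `1` is an obstacle; real systems typically use richer maps and planners.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid.

    Returns the list of (row, col) cells from start to goal,
    or None if the goal cannot be reached.
    """
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    came_from = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk the parent links back to the start.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and nxt not in came_from:
                came_from[nxt] = cell
                queue.append(nxt)
    return None


warehouse = [
    [0, 0, 0],
    [1, 1, 0],
    [0, 0, 0],
]
print(shortest_path(warehouse, (0, 0), (2, 0)))
```

Because every step has the same cost, breadth-first search returns a path with the fewest grid moves.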

Dynamic Task Assignment:

AR systems can dynamically assign tasks to human workers and cobots based on real-time factors like workload, proximity, and skill levels. This ensures efficient task allocation and minimizes downtime.
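A minimal sketch of this kind of allocation is a greedy rule: for each task, pick the qualified agent with the lowest combined cost of distance and current workload. The `Agent` and `Task` classes and the `workload_penalty_m` weight are hypothetical, chosen only to illustrate how workload, proximity, and skills might be combined.

```python
import math
from dataclasses import dataclass


@dataclass
class Agent:
    """A human worker or cobot (hypothetical model)."""
    name: str
    position: tuple
    skills: set
    workload: int = 0


@dataclass
class Task:
    name: str
    position: tuple
    skill: str


def assign(tasks, agents, workload_penalty_m=2.0):
    """Greedily map each task to the cheapest qualified agent.

    Cost = distance to the task + a penalty per task already assigned.
    Tasks with no qualified agent map to None.
    """
    plan = {}
    for task in tasks:
        candidates = [a for a in agents if task.skill in a.skills]
        if not candidates:
            plan[task.name] = None
            continue
        best = min(
            candidates,
            key=lambda a: math.dist(a.position, task.position)
            + workload_penalty_m * a.workload,
        )
        best.workload += 1
        plan[task.name] = best.name
    return plan


agents = [
    Agent("cobot", (0.0, 0.0), {"lift", "hold"}),
    Agent("worker", (5.0, 0.0), {"inspect", "hold"}),
]
tasks = [
    Task("t1", (1.0, 0.0), "lift"),
    Task("t2", (2.0, 0.0), "hold"),
    Task("t3", (2.0, 0.0), "weld"),
]
print(assign(tasks, agents))
```

A greedy rule is easy to follow but not globally optimal; a real scheduler might solve the assignment jointly across all tasks.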

Collaborative Training Environments:

AR can create shared training environments where human workers and cobots can practice collaborative tasks safely. This fosters better teamwork and communication between humans and robots.

Multi-robot Collaboration:

AR can help orchestrate the collaboration of multiple cobots and human workers in complex tasks. It can provide a centralized interface for monitoring, controlling, and coordinating the actions of multiple robots.

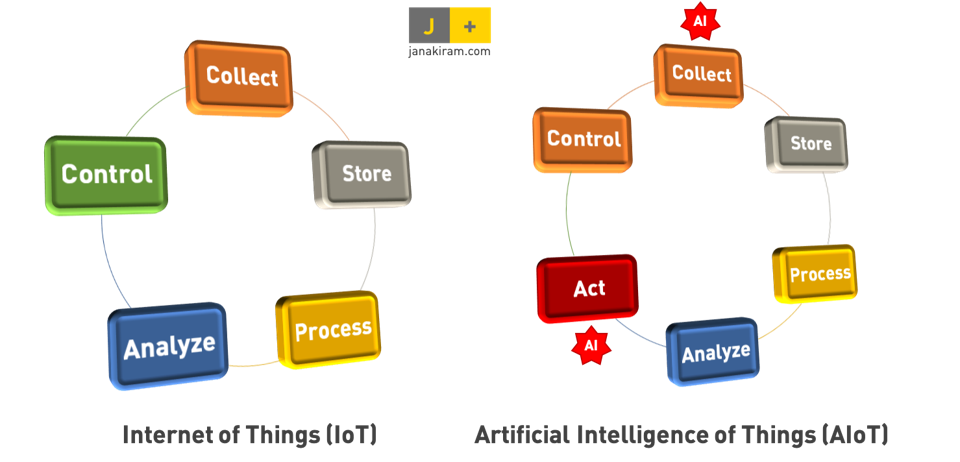

Data Visualization:

AR can display real-time data and analytics related to cobot performance, production rates, and quality metrics, allowing workers to make informed decisions and adjustments.

These are just some of the ways that AR can be used to optimize collaborative robotics applications. By taking advantage of AR-enabled solutions, companies can improve efficiency in their operations and reduce downtime. With its ability to facilitate human-robot collaboration and enhance safety protocols, AR is an invaluable tool for unlocking the potential of cobots in industrial use cases.

Augmented reality (AR) technology is becoming a cornerstone of robotics development. It brings together various elements to enhance human-robot interaction. By integrating AR into robotics, efficiency is increased and errors are reduced. Successful examples of AR integration in robotic systems show the substantial benefits it brings to diverse industries, including manufacturing, healthcare, automotive, and entertainment. The challenge for businesses now lies in identifying the most significant opportunities this technology offers and putting them to good use.