Biotechnology has revolutionized the field of medical diagnostics and imaging by providing more accurate, efficient, and personalized healthcare solutions. It leverages cutting-edge technologies like genomics, proteomics, and molecular imaging to detect diseases at their earliest stages, thereby improving patient outcomes. The integration of artificial intelligence and machine learning further amplifies the capabilities of biotech solutions, enabling faster analysis and interpretation of complex medical data.

This convergence of biotechnology and digital technology not only accelerates diagnostic processes but also enhances the precision of imaging, aiding in better treatment planning. As the healthcare landscape continues to evolve, the adaptability and innovation brought forth by biotech advancements signal a paradigm shift, one that compels industry leaders to stay abreast of the latest developments in order to harness the full potential of these transformative tools.

The Rise of Biotech in Medical Diagnostics

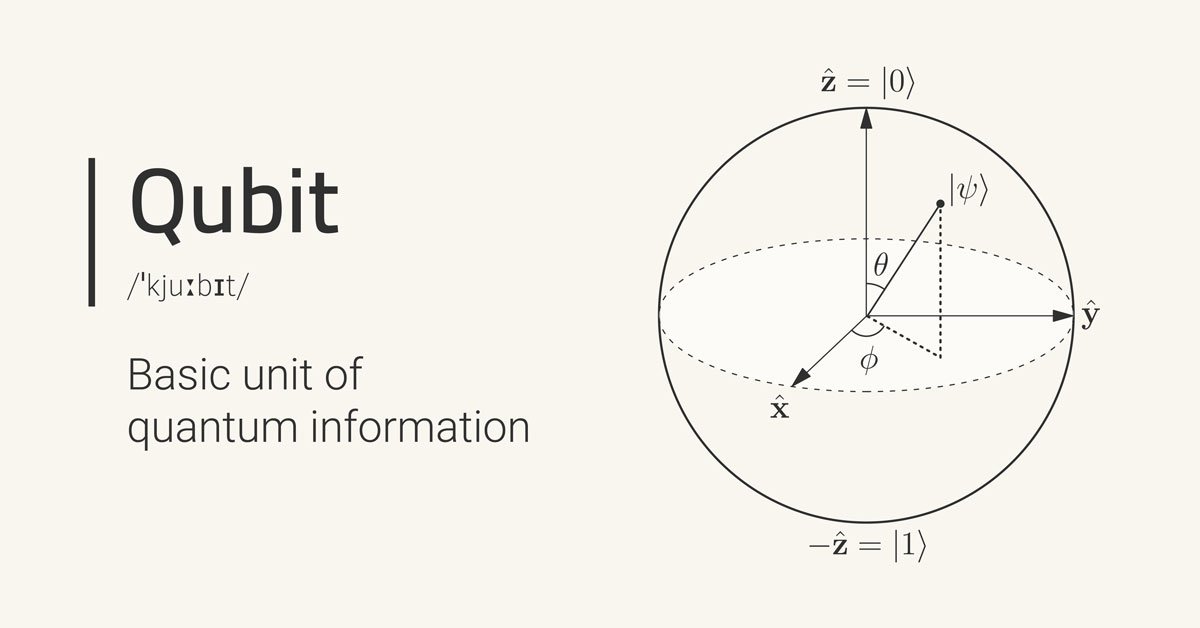

Biotechnology in medical diagnostics has opened new doors for early disease detection and personalized treatment plans. By drawing on molecular biology methods and bioinformatics, healthcare professionals can reach more accurate diagnoses. For instance, genomics has enabled the identification of genetic markers for various diseases, such as BRCA1 and BRCA2 variants linked to hereditary breast and ovarian cancer, allowing for targeted interventions. This precision not only improves patient outcomes but also reduces the trial-and-error approach traditionally associated with diagnosis and treatment selection.

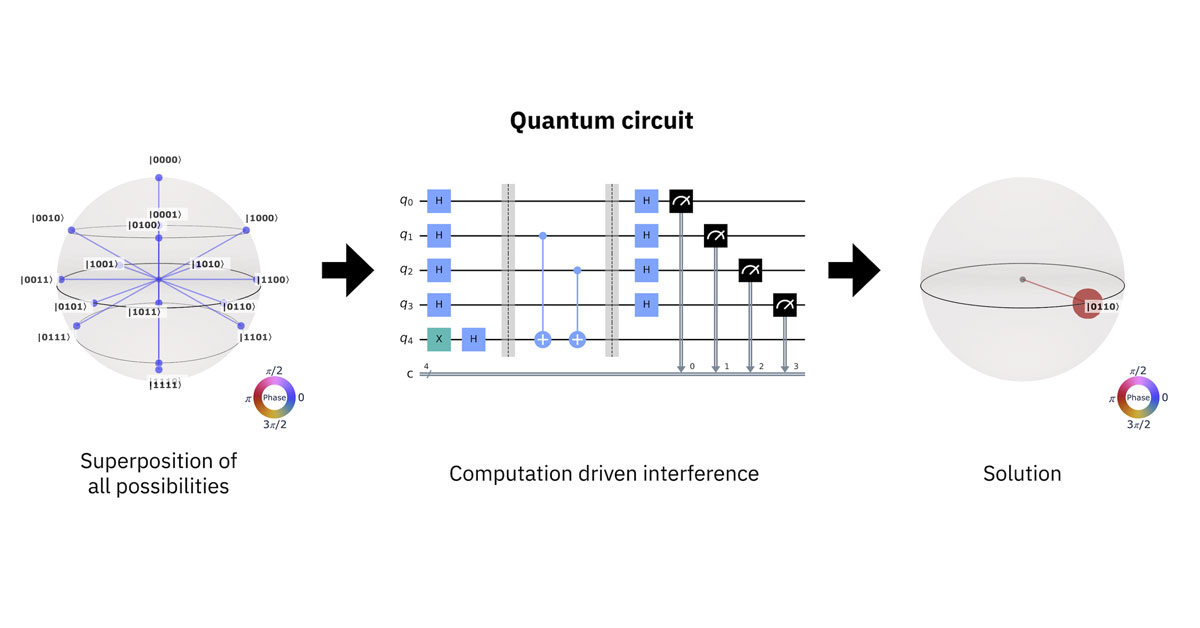

Integrating biotech into diagnostics also streamlines workflows and can significantly shorten the time required for disease detection. Automated systems and machine learning algorithms now handle much of the complex data analysis, returning fast and reliable results to healthcare providers. This efficiency improves the overall quality of care, allowing doctors to spend more time with patients and less on manual data review.

Biotech-based diagnostics also promote proactive health management. Regular screenings with advanced diagnostic tools can detect potential health issues before they become critical. When risks are identified early, patients can take preventive measures, which can lead to healthier lifestyles and lower healthcare costs.

How Biotech Is Transforming Medical Imaging

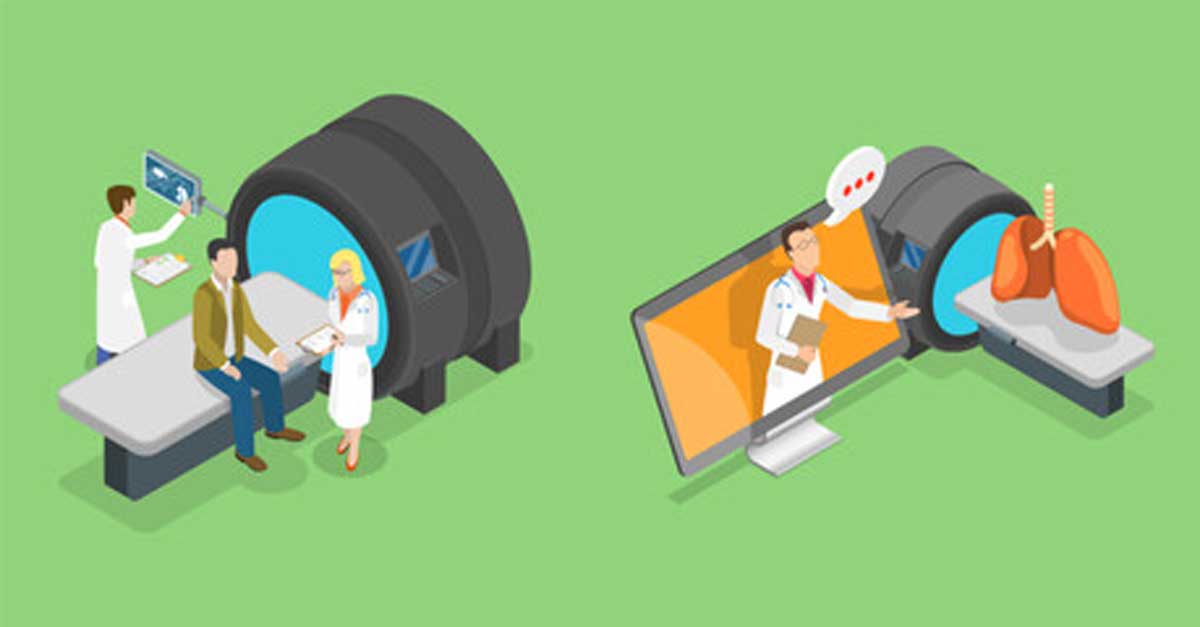

Medical imaging has been transformed by biotech innovations. Established modalities such as MRI, CT, and ultrasound are increasingly combined with biotechnology, for example through targeted contrast agents and molecular probes, to produce clearer and more informative images. These advances help doctors detect abnormalities that were previously difficult or impossible to see, improving diagnostic accuracy.

One of the most exciting developments in medical imaging is the use of AI and machine learning. These technologies analyze imaging data to identify patterns and anomalies that a human reader can easily miss. For example, AI algorithms can detect small changes in tumor size or structure across successive scans, aiding early cancer detection and monitoring.

Additionally, biotech advancements have led to the development of novel imaging agents that target specific tissues or cells. These agents provide enhanced contrast in images, making it easier to differentiate between healthy and diseased tissue. This precision is particularly beneficial in complex cases, such as neurological disorders, where traditional imaging methods may fall short.

Medical imaging powered by biotech also plays a crucial role in guiding surgical procedures. Real-time imaging offers surgeons a detailed view of the operating field, reducing the risk of complications and enhancing surgical precision. This capability is invaluable in minimally invasive surgeries, where accuracy is paramount.

Advantages of Biotechnology in Diagnostics and Imaging

The integration of biotechnology into medical diagnostics and imaging has ushered in a new era of accuracy and precision. Using advanced technologies such as genomics, proteomics, and molecular imaging, healthcare practitioners can often detect diseases earlier than conventional methods allow. Early detection is critical for conditions like cancer and genetic disorders, where timely intervention can dramatically improve patient outcomes and survival rates.

Biotechnology also enables personalized treatment plans tailored to individual patients. By understanding the genetic and molecular makeup of a patient’s disease, doctors can prescribe therapies that are more likely to be effective and to cause fewer side effects.

Beyond personalized care, biotech innovations have greatly improved the speed of diagnostics. Automated systems powered by artificial intelligence and machine learning can analyze large volumes of data far faster than manual review. This rapid processing allows healthcare providers to intervene sooner and can potentially save lives. Fast diagnosis is especially important in emergencies such as stroke, where every minute counts. Overall, rapid advances in biotech are reshaping healthcare, making it more efficient and patient-centric.

Challenges and Considerations

While the advantages of biotechnology are clear, significant challenges remain. One major hurdle is the substantial investment required in both infrastructure and training. Cutting-edge technology demands updated facilities and equipment, and the high costs can be prohibitive for smaller healthcare facilities. These financial constraints can widen disparities in healthcare access, as smaller or rural centers may be unable to afford the latest innovations.

Another critical consideration is the ethics of using AI and machine learning in healthcare. Reliance on these technologies raises data privacy concerns, since sensitive patient information must be protected against breaches. Algorithmic bias poses a further risk: if not carefully designed and tested, AI systems may reflect or even amplify existing biases in medical decision-making. Ensuring fairness and accuracy during algorithm development is paramount to avoid harm to patients.
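One common way to put the "tested" part into practice is a subgroup audit: measure a model's performance separately for each patient group and compare the results. The sketch below is a minimal, hypothetical illustration; the function name `sensitivity_by_group`, the tuple layout, and the sample data are assumptions made for this example, not a standard tool or a complete fairness evaluation.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Compute sensitivity (true positive rate) separately for each patient group.

    Hypothetical helper: `records` is an iterable of
    (group, has_disease, model_flagged) tuples. Groups with no positive
    cases are omitted, because sensitivity is undefined for them.
    """
    true_pos = defaultdict(int)   # diseased patients the model flagged, per group
    positives = defaultdict(int)  # all diseased patients, per group
    for group, has_disease, model_flagged in records:
        if has_disease:
            positives[group] += 1
            if model_flagged:
                true_pos[group] += 1
    return {g: true_pos[g] / positives[g] for g in positives}


# Made-up audit data: (group, has_disease, model_flagged)
records = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("B", False, True),
]
rates = sensitivity_by_group(records)
print(rates)  # {'A': 0.75, 'B': 0.25}
gap = max(rates.values()) - min(rates.values())
print(f"sensitivity gap: {gap:.2f}")  # sensitivity gap: 0.50
```

A large gap between groups, as in this made-up data, would suggest the model misses disease more often in one population and should be investigated before clinical use. Real audits usually examine several metrics (such as specificity and calibration) and need enough cases per group for the comparison to be meaningful.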

Balancing Benefits and Challenges

To capitalize on the transformative potential of biotechnology in medical diagnostics and imaging, stakeholders need deliberate strategies for addressing these challenges. This begins with fostering collaboration among governmental agencies, healthcare institutions, technology developers, and patient advocacy groups. Working together, these groups can develop comprehensive solutions that strengthen regulatory compliance, safeguard data security, and reduce bias in AI systems.

Investment in education and training is equally crucial. As biotechnology continues to evolve rapidly, healthcare professionals must be equipped with the necessary skills to operate new technologies and interpret their results accurately. Training programs should be developed in tandem with technological advancements to ensure that medical practitioners remain at the forefront of innovation, facilitating seamless integration into clinical practice.

Additionally, targeted investments in infrastructure are essential to bridge the gap between large urban centers and underserved rural or remote areas. Initiatives to subsidize costs for advanced equipment and software can play a major role in leveling the playing field, ensuring that patients everywhere have access to state-of-the-art diagnostic and imaging services. Moreover, promoting the development of portable and cost-effective imaging solutions could revolutionize healthcare delivery by making cutting-edge technology accessible to a broader patient base.

Collaboration between the public and private sectors is paramount in driving innovation forward. Public funding can support foundational research and development, while private industry plays a vital role in commercializing and scaling up new technologies. These partnerships can drive the development of policies that promote equitable access and address ethical concerns while harnessing the power of biotechnology to improve patient outcomes.

Lastly, public awareness and patient education regarding biotechnology’s capabilities and limitations are essential. An informed patient population can actively participate in their healthcare decisions and advocate for the adoption of beneficial innovations. Through these strategic efforts, the healthcare industry can effectively harness the benefits of biotechnology in diagnostics and imaging, ensuring that the advancements lead to a more inclusive, efficient, and responsive healthcare system.

Companies Leading Innovation in Biotechnology Diagnostics and Imaging

Several pioneering companies are pushing the boundaries of biotechnology in diagnostics and imaging. Roche Diagnostics is a leader in genomics and proteomics, developing diagnostic solutions that enable early disease detection and personalized medicine, allowing clinicians to tailor treatments effectively. Illumina is renowned for its sequencing technologies that have transformed genomics, facilitating comprehensive studies to identify genetic mutations for improved therapeutic strategies. Siemens Healthineers integrates molecular imaging with AI, enhancing diagnostic accuracy, particularly in oncology, where precise tumor characterization aids targeted treatment. GE Healthcare focuses on digital tools and portable imaging equipment, making healthcare technology accessible even in resource-limited settings. Thermo Fisher Scientific advances laboratory diagnostics with automated systems that deliver swift results, which are essential for prompt clinical actions.

These companies exemplify the potential of biotechnology to transform medical diagnostics and imaging, making healthcare more predictive, preventive, and personalized. By continuing to innovate and invest in developing new technologies, these industry leaders are paving the way for a future where biotechnology plays an integral role in improved patient care and outcomes.

Future Plans for Biotech Medical Diagnostics and Imaging

The future of biotech medical diagnostics and imaging is full of promise, driven by research into transformative technologies. Nanotechnology is at the forefront, offering capabilities that could significantly enhance imaging and diagnostics. Nanoparticles engineered to selectively target diseased cells could give healthcare providers images with sharper contrast and greater specificity, supporting earlier and more accurate diagnoses. This precision could improve disease management and reduce the need for invasive procedures such as biopsies, resulting in better patient outcomes.

Alongside nanotechnology, the integration of wearable technology into biotech diagnostics opens a new frontier in health monitoring. Wearable devices such as smartwatches and fitness trackers now carry sensors capable of continuous monitoring. These devices can track vital signs like heart rate and blood oxygen saturation in real time, and some models also estimate blood pressure, alerting users and healthcare professionals to potential anomalies. This immediacy in data collection and analysis empowers individuals to take proactive steps toward preventive care, which may reduce severe health events and hospital visits.
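To make the alerting idea concrete, here is a minimal sketch of one simple approach: compare each new reading with a rolling baseline of the readings just before it and flag large deviations. This is a hypothetical illustration, not how any particular smartwatch works; the function `flag_anomalies`, the window size, the z-score threshold, and the heart-rate values are all assumptions.

```python
from statistics import mean, stdev


def flag_anomalies(readings, window=5, z_threshold=3.0):
    """Return indices of readings that deviate sharply from the recent baseline.

    Hypothetical illustration: each reading is compared with the mean and
    sample standard deviation of the `window` readings immediately before it.
    """
    flagged = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # A flat baseline has zero spread, so any change from it is flagged.
            if readings[i] != mu:
                flagged.append(i)
            continue
        z = (readings[i] - mu) / sigma  # how many standard deviations away
        if abs(z) > z_threshold:
            flagged.append(i)
    return flagged


# Made-up heart-rate samples (beats per minute) with one spike
heart_rate = [72, 74, 71, 73, 72, 75, 74, 128, 73, 72]
print(flag_anomalies(heart_rate))  # [7]
```

Because the spike at index 7 then becomes part of the baseline for later readings, it inflates the standard deviation and could mask a second anomaly shortly afterward. A real monitoring system might instead use a robust statistic such as the median, exclude flagged readings from the baseline, and combine several signals before alerting anyone.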

Key to the successful advancement of these innovations is the cross-industry collaboration between technology companies and healthcare providers. By fostering partnerships and pooling expertise, these entities can overcome sector-specific challenges to create cohesive solutions that cater to the complex needs of modern healthcare. Leveraging their collective strengths, they can drive the adoption of biotech advancements at a much faster pace, ensuring that healthcare systems are not only more efficient but also accessible to a broader audience.

As these collaborative efforts gain momentum, they hold the potential to transform the landscape of patient care, making it more personalized, accurate, and responsive to the needs of each individual. By focusing on these strategic plans, the biotech industry can pave the way for revolutionary changes in medical diagnostics and imaging, fundamentally reshaping how diseases are detected, monitored, and treated.

Conclusion

As we wrap up our discussion of advancements in biotech medical diagnostics and imaging, the profound impact of these technologies on healthcare is evident. Remarkable gains in the accuracy and speed of diagnosis, together with the arrival of personalized treatment options, underscore biotech’s role in building a healthier future. Despite the inevitable challenges of implementation, the potential to improve healthcare efficiency, effectiveness, and accessibility far outweighs the hurdles.

This concludes our biotech series. We invite you to join us for our upcoming blog, "How to Outsource Mobile App Development," where we will explore essential strategies and insights to empower your business in the digital era. Stay tuned for more transformative conversations.